Recurrent Neural Networks

Compared to vanilla neural networks, there are two things we need to know first about RNNs:

- A recurrent neural network is a neural network that is specialized for processing a sequence of values $x_1, \dots, x_T$. Recurrent networks can scale to much longer sequences than would be practical for networks without sequence-based specialization.

- Most recurrent networks can also process sequences of variable length.

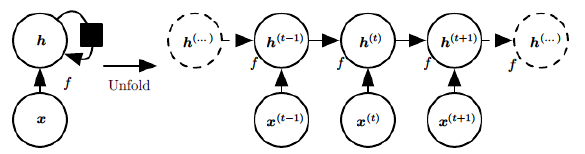

Overview: A Simple RNN And The Computational Graph

Essentially, any function involving recurrence can be considered a recurrent neural network. Many recurrent neural networks use Eq. 1 or a similar equation to define the values of their hidden units:

$$h_t = f(h_{t-1}, x_t; \theta) \qquad (1)$$

Most RNNs also have an output layer $y_t = g(h_t)$, where $g$ could be the softmax function for classification tasks.
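As a rough illustration of the recurrence and the output layer, here is a minimal sketch of a scalar RNN. The function name `rnn_forward`, the weights `w_h`, `w_x`, `v`, the choice of `tanh` as the nonlinearity, and the two-class softmax are all assumptions made for this example, not something the notes prescribe.

```python
import math

def rnn_forward(xs, w_h, w_x, v, h0=0.0):
    """Run a scalar RNN over the sequence xs and return (hidden states, outputs)."""
    h = h0
    hs, ys = [], []
    for x in xs:
        # Recurrence with tanh of an affine map: h_t = tanh(w_h * h_{t-1} + w_x * x_t)
        h = math.tanh(w_h * h + w_x * x)
        hs.append(h)
        # Output layer: two-class softmax over the logits (v * h_t, 0)
        logits = [v * h, 0.0]
        top = max(logits)
        exps = [math.exp(l - top) for l in logits]
        total = sum(exps)
        ys.append([e / total for e in exps])
    return hs, ys

# The same parameters process sequences of different lengths.
short_hs, short_ys = rnn_forward([1.0, -0.5], w_h=0.5, w_x=1.0, v=2.0)
long_hs, long_ys = rnn_forward([1.0, -0.5, 0.3, 0.8, -1.2], w_h=0.5, w_x=1.0, v=2.0)
```

Because the parameters are shared across time steps, the loop body does not depend on the sequence length, which is why both calls above work with one set of weights.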

The Challenges of Long-Term Dependencies

The Vanishing Gradient Problem

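Let us discuss this problem under a more realistic RNN formulation:

$$h_t = W f(h_{t-1}) + W^{(hx)} x_t$$

$$\hat{y}_t = \mathrm{softmax}\left(W^{(S)} f(h_t)\right)$$

where $W$ is the shared hidden layer parameter, $W^{(hx)}$ is the input transformation parameter, and $W^{(S)}$ is the output softmax parameter.

Let $E$ be the total error, and $E_t$ be the error at a specified time step $t$. Let $T$ be the total number of time steps, then

$$\frac{\partial E}{\partial W} = \sum_{t=1}^{T} \frac{\partial E_t}{\partial W}$$

Apply the chain rule to $\partial E_t / \partial W$,

$$\frac{\partial E_t}{\partial W} = \sum_{k=1}^{t} \frac{\partial E_t}{\partial \hat{y}_t} \frac{\partial \hat{y}_t}{\partial h_t} \frac{\partial h_t}{\partial h_k} \frac{\partial h_k}{\partial W}$$

Consider $\partial h_t / \partial h_k$,

$$\frac{\partial h_t}{\partial h_k} = \prod_{j=k+1}^{t} \frac{\partial h_j}{\partial h_{j-1}}$$

Each factor $\partial h_j / \partial h_{j-1}$ is the Jacobian matrix of $h_j$ with respect to $h_{j-1}$:

$$\frac{\partial h_j}{\partial h_{j-1}} = \begin{bmatrix} \frac{\partial h_{j,1}}{\partial h_{j-1,1}} & \cdots & \frac{\partial h_{j,1}}{\partial h_{j-1,D_h}} \\ \vdots & \ddots & \vdots \\ \frac{\partial h_{j,D_h}}{\partial h_{j-1,1}} & \cdots & \frac{\partial h_{j,D_h}}{\partial h_{j-1,D_h}} \end{bmatrix}$$

$h_{j,m}$ means the $m$-th element in vector $h_j$. Note that, according to the definition of the Jacobian, given a vector $u$ with length $p$ and $v(u)$ with length $q$, the Jacobian $\partial v / \partial u$ is a $q \times p$ matrix.

Now consider the derivative of each element of the matrix $\partial h_j / \partial h_{j-1}$. Because

$$h_{j,m} = \sum_{n=1}^{D_h} W_{m,n} f(h_{j-1,n}) + \sum_{n=1}^{D_x} W^{(hx)}_{m,n} x_{j,n}$$

where $W_{m,n}$ means the element at the $m$-th row, $n$-th column of matrix $W$, we have

$$\frac{\partial h_{j,m}}{\partial h_{j-1,n}} = W_{m,n} f'(h_{j-1,n})$$

The shapes of $W$, $W^{(hx)}$, $h_j$, $x_j$ are $D_h \times D_h$, $D_h \times D_x$, $D_h \times 1$, $D_x \times 1$ respectively.

Note that $f$ is a pointwise nonlinearity. Finally,

$$\frac{\partial h_j}{\partial h_{j-1}} = W \, \mathrm{diag}\left[f'(h_{j-1})\right]$$

where

$$\mathrm{diag}(z) = \begin{bmatrix} z_1 & & \\ & \ddots & \\ & & z_{D_h} \end{bmatrix}$$

Analyzing the norm of the Jacobians,

$$\left\| \frac{\partial h_j}{\partial h_{j-1}} \right\| \le \left\| W \right\| \left\| \mathrm{diag}\left[f'(h_{j-1})\right] \right\| \le \beta_W \beta_h$$

where $\beta_W$, $\beta_h$ are the upper bounds of the norms of $W$, $\mathrm{diag}[f'(h_{j-1})]$. So,

$$\left\| \frac{\partial h_t}{\partial h_k} \right\| = \left\| \prod_{j=k+1}^{t} \frac{\partial h_j}{\partial h_{j-1}} \right\| \le (\beta_W \beta_h)^{t-k}$$

which changes exponentially in $t - k$ and could easily become very large (when $\beta_W \beta_h > 1$) or very small (when $\beta_W \beta_h < 1$).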
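To make the exponential behaviour concrete, the following sketch multiplies the one-step derivatives of a scalar recurrence $h_j = w \tanh(h_{j-1}) + w_x x_j$. The scalar setting, the choice $f = \tanh$, and the name `jacobian_product` are assumptions for illustration; in this case the per-step factor is $w \, f'(h_{j-1})$ and, since $|\tanh'| \le 1$, it is bounded by $|w|$.

```python
import math

def jacobian_product(w, w_x, xs, h0=0.0):
    """Return d h_t / d h_0 for the scalar recurrence h_j = w * tanh(h_{j-1}) + w_x * x_j."""
    h = h0
    product = 1.0
    for x in xs:
        # One-step factor: d h_j / d h_{j-1} = w * f'(h_{j-1}), with f = tanh
        product *= w * (1.0 - math.tanh(h) ** 2)
        h = w * math.tanh(h) + w_x * x
    return product

xs = [0.0] * 50                         # zero inputs keep h at 0, so f'(h) = 1 at every step
small = jacobian_product(0.5, 1.0, xs)  # shrinks by a factor of 0.5 per step
large = jacobian_product(1.5, 1.0, xs)  # grows by a factor of 1.5 per step
```

After 50 steps `small` is far below machine-level gradient scales and `large` is in the hundreds of millions, even though the two weights differ only by 1.0.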

Vanishing gradients harm the learning process. Ideally, the error at a time step can tell a time step many steps earlier how to change during backpropagation. When the gradient vanishes, information from distant time steps is not taken into consideration. For example, in language model training, given the sentence:

Jane walked into the room. John walked in too. It was late in the day. Jane said hi to (?)

We can easily see that the question mark should be John; however, this prediction depends on the word "John" 16 time steps earlier (counting punctuation marks as tokens). It is hard for the error signal to flow across that many time steps.
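The 16-step distance can be checked by counting tokens between the word to predict and the earlier mention of John that determines it. This is only a sketch: the tokenization rule (each word and each full stop is one time step) is an assumption, and a different tokenizer would give a different count.

```python
import re

sentence = ("Jane walked into the room. John walked in too. "
            "It was late in the day. Jane said hi to")

# Assumption: words and full stops each count as one time step.
tokens = re.findall(r"\w+|\.", sentence)

target_step = len(tokens)          # 0-based position of the word to predict
john_step = tokens.index("John")   # 0-based position of the earlier mention
distance = target_step - john_step
```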

Multiple Time Scales

One way to deal with long-term dependencies is to design a model that operates at multiple time scales, so that some parts of the model operate at fine-grained time scales and can handle small details, while other parts operate at coarse time scales and transfer information from the distant past to the present more efficiently.

- Adding Skip Connections through Time: add direct connections from variables in the distant past to variables in the present.

- Leaky Units: When we accumulate a running average $\mu_t$ of some value $v_t$ by applying the update $\mu_t \leftarrow \alpha \mu_{t-1} + (1 - \alpha) v_t$, the parameter $\alpha$ is an example of a linear self-connection from $\mu_{t-1}$ to $\mu_t$. Leaky units are hidden units with linear self-connections. A leaky unit in an RNN could be represented as $h_{t,i} = \alpha_i h_{t-1,i} + (1 - \alpha_i) F_i(h_{t-1}, x_t)$, where $F_i$ is the usual recurrent update for unit $i$. The standard RNN corresponds to $\alpha_i = 0$, while in Bengio et al.'s experiments different values of $\alpha_i$ were randomly sampled from a fixed interval, allowing some units to react quickly while others are forced to change slowly, but also propagate signals and gradients further in time. Note that because $\alpha_i < 1$, the vanishing effect is still present (and gradients can still explode via $F$), but the time scale of the vanishing effect can be expanded.

- Remove Connections: remove length-one connections and replace them with longer connections.
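The effect of the linear self-connection in a leaky unit can be sketched with the running-average update $\mu_t = \alpha \mu_{t-1} + (1 - \alpha) v_t$. The function name `leaky_trace` and the two values of `alpha` are illustrative assumptions; the point is only that a value of $\alpha$ closer to 1 keeps past information alive for more steps.

```python
def leaky_trace(alpha, inputs, mu0=0.0):
    """Apply mu_t = alpha * mu_{t-1} + (1 - alpha) * v_t and return every mu_t."""
    mu = mu0
    trace = []
    for v in inputs:
        mu = alpha * mu + (1.0 - alpha) * v
        trace.append(mu)
    return trace

# A unit that starts at 1.0 and then receives only zero input:
# what remains after t steps is alpha ** t.
steps = 20
fast = leaky_trace(alpha=0.1, inputs=[0.0] * steps, mu0=1.0)
slow = leaky_trace(alpha=0.9, inputs=[0.0] * steps, mu0=1.0)
```

After 20 steps the fast unit has forgotten its initial state almost completely, while the slow unit still retains more than a tenth of it. The decay is still exponential in both cases, which matches the remark that the vanishing effect is stretched out rather than removed.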

LSTM

Like leaky units, gated RNNs (long short-term memory and networks based on the gated recurrent unit, among others) are based on the idea of creating paths through time that have derivatives that neither vanish nor explode. Leaky units did this with connection weights that were either manually chosen constants or learned parameters. Gated RNNs generalize this to connection weights that may change at each time step, conditioned on the context.

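An LSTM unit consists of a memory cell $c_t$, an input gate $i_t$, a forget gate $f_t$, and an output gate $o_t$. The memory cell carries the memory content of an LSTM unit, while the gates control the amount of change to, and exposure of, the memory content. The content of the memory cell at time step $t$ is updated in a form similar to a gated leaky neuron:

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

where $\odot$ denotes element-wise multiplication and $\tilde{c}_t$ is the candidate memory:

$$\tilde{c}_t = \tanh(W_c x_t + U_c h_{t-1})$$

Gates are all sigmoid functions of an affine transformation of the current input and the last hidden state:

$$i_t = \sigma(W_i x_t + U_i h_{t-1}), \qquad f_t = \sigma(W_f x_t + U_f h_{t-1})$$

The final hidden state $h_t$ of the unit can be computed as

$$h_t = o_t \odot \tanh(c_t)$$

where

$$o_t = \sigma(W_o x_t + U_o h_{t-1})$$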
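The LSTM update described above can be sketched for a single scalar unit as follows. This is a simplified illustration under stated assumptions: one-dimensional input and state, no bias or peephole terms, and a hypothetical parameter dictionary `p` that maps each of `"i"`, `"f"`, `"o"`, `"c"` to an (input weight, recurrent weight) pair.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single scalar LSTM unit; p maps names to (W, U) weight pairs."""
    # Gates: sigmoid of an affine function of the current input and the last hidden state
    i = sigmoid(p["i"][0] * x + p["i"][1] * h_prev)          # input gate
    f = sigmoid(p["f"][0] * x + p["f"][1] * h_prev)          # forget gate
    o = sigmoid(p["o"][0] * x + p["o"][1] * h_prev)          # output gate
    c_tilde = math.tanh(p["c"][0] * x + p["c"][1] * h_prev)  # candidate memory
    c = f * c_prev + i * c_tilde                             # gated memory update
    h = o * math.tanh(c)                                     # exposed hidden state
    return h, c

params = {name: (0.5, 0.5) for name in ("i", "f", "o", "c")}  # illustrative weights only
h, c = 0.0, 0.0
for x in [1.0, -1.0, 0.5]:
    h, c = lstm_step(x, h, c, params)
```

When the forget gate saturates near 1 and the input gate near 0, the cell simply copies `c_prev` forward, which is the path through time along which the derivative neither vanishes nor explodes.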

GRU

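The GRU is more like a leaky unit, except that the linear self-connection weight, here $1 - z_t$, now becomes trainable and depends on the context:

$$h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$$

where $\tilde{h}_t$ is the new candidate memory:

$$\tilde{h}_t = \tanh\left(W x_t + r_t \odot (U h_{t-1})\right)$$

$$z_t = \sigma(W_z x_t + U_z h_{t-1}), \qquad r_t = \sigma(W_r x_t + U_r h_{t-1})$$

$z_t$ is called the update gate and $r_t$ is called the reset gate.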
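A scalar sketch of one GRU step shows how the update gate interpolates between the previous state and the candidate. The scalar setting, the absence of bias terms, and the hypothetical parameter dictionary `p` (mapping `"z"`, `"r"`, `"h"` to an (input weight, recurrent weight) pair) are assumptions for this example.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gru_step(x, h_prev, p):
    """One step of a single scalar GRU unit; p maps names to (W, U) weight pairs."""
    z = sigmoid(p["z"][0] * x + p["z"][1] * h_prev)                # update gate
    r = sigmoid(p["r"][0] * x + p["r"][1] * h_prev)                # reset gate
    h_tilde = math.tanh(p["h"][0] * x + r * (p["h"][1] * h_prev))  # candidate memory
    # Leaky-style interpolation with a context-dependent coefficient
    return (1.0 - z) * h_prev + z * h_tilde

# Update gate driven towards 0: the unit keeps its previous state.
keep = {"z": (-50.0, 0.0), "r": (0.0, 0.0), "h": (1.0, 1.0)}
kept = gru_step(1.0, 0.3, keep)

# Update gate driven towards 1: the unit takes the candidate value.
overwrite = {"z": (50.0, 0.0), "r": (0.0, 0.0), "h": (1.0, 1.0)}
replaced = gru_step(1.0, 0.3, overwrite)
```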

Trick for exploding gradient: clipping trick

The intuition is simple: we set a threshold on the gradient so that its absolute value is never larger than the threshold.

```python
# Clamp a scalar gradient to the range [-threshold, threshold].
if abs(gradient) > threshold:
    gradient = threshold if gradient > 0 else -threshold
```
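For a gradient with several components, the same rule can be applied to each component. The sketch below also includes norm-based rescaling, a common alternative that the text above does not describe and that is named here only for comparison. Both function names are hypothetical.

```python
import math

def clip_by_value(grads, threshold):
    """Clamp every component of grads to [-threshold, threshold]."""
    return [max(-threshold, min(threshold, g)) for g in grads]

def clip_by_norm(grads, threshold):
    """Rescale grads so that its Euclidean norm is at most threshold."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= threshold:
        return list(grads)
    return [g * threshold / norm for g in grads]

grads = [3.0, -4.0, 0.5]
by_value = clip_by_value(grads, 1.0)   # [1.0, -1.0, 0.5]
by_norm = clip_by_norm(grads, 1.0)     # same direction as grads, norm 1.0
```

Component-wise clipping can change the direction of the gradient, while norm-based rescaling keeps the direction and only shortens the step.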

References

- Goodfellow, Bengio, and Courville. Deep Learning, chapter 10, "Sequence Modeling: Recurrent and Recursive Nets"

- Bengio, Boulanger-Lewandowski, and Pascanu. "Advances in Optimizing Recurrent Networks"

- Socher. CS224d class notes

- Chung, Gulcehre, Cho, and Bengio. "Gated Feedback Recurrent Neural Networks"