A Neural Network for Factoid Question Answering over Paragraphs

[paper]

This paper note is for group sharing.

Novelty and Contribution

- Text classification methods based on manually defined string matching rules or bag-of-words representations are ineffective when the question text contains very few individual words (e.g., named entities) that are indicative of the answer. This paper introduces a dependency-tree recursive neural network (DT-RNN) model that can reason over such questions by modeling textual compositionality;

- Unlike recurrent neural networks, DT-RNNs are capable of learning word- and phrase-level representations and are therefore robust to similar sentences with slightly different syntax, which is ideal for this problem domain.

Model details

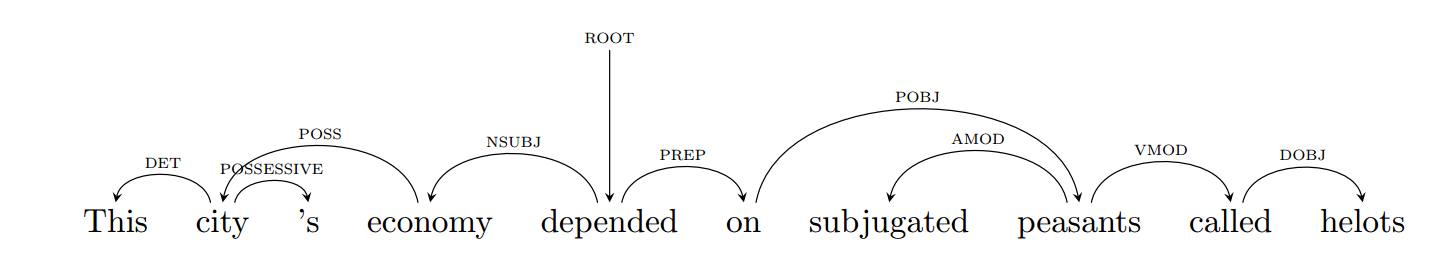

We will show how the DT-RNN works using the dependency parse shown above, taken from a sentence in a question about Sparta: “This city’s economy depended on subjugated peasants called helots.”

Some prerequisites

- For each word $w$ in our vocabulary, we associate a vector representation $x_w \in \mathbb{R}^d$. These representations are stored as the columns of a $d \times V$ dimensional word embedding matrix $W_e$, where $V$ is the size of the vocabulary;

- Each node $n$ in the parse tree is associated with a word $w$, a word vector $x_w$, and a hidden vector $h_n \in \mathbb{R}^d$ of the same dimension as the word vectors. For internal nodes, this vector is a phrase-level representation, while at leaf nodes it is the word vector $x_w$ mapped into the hidden space. Unlike constituency trees, where all words reside at the leaf level, the internal nodes of a dependency tree are also associated with words, so the DT-RNN has to combine the current node’s word vector with its children’s hidden vectors to form $h_n$. This process continues recursively up to the root, which represents the entire sentence;

- A $d \times d$ matrix $W_v$ incorporates the word vector $x_w$ into $h_n$;

- For each dependency relation $r$ (e.g., the DET, POSSESSIVE and POSS labels in Figure 1), we associate a separate $d \times d$ matrix $W_r$.
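To make the shapes above concrete, here is a minimal pure-Python sketch of the parameters. The dimension `d = 4`, the ten-word vocabulary (just the words of the example sentence) and the relation list are illustrative assumptions, and `word_vector` is a hypothetical helper; a real model would use a much larger `d` and `V`.

```python
import random

d = 4  # embedding / hidden dimension (illustrative)
vocab = ["this", "city", "'s", "economy", "depended", "on",
         "subjugated", "peasants", "called", "helots"]
relations = ["DET", "POSSESSIVE", "POSS", "NSUBJ", "PREP",
             "POBJ", "AMOD", "VMOD", "DOBJ"]
V = len(vocab)
word_index = {w: i for i, w in enumerate(vocab)}
rng = random.Random(0)


def rand_matrix(rows, cols, scale=0.1):
    """rows x cols matrix (list of rows) with small uniform random entries."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


W_e = rand_matrix(d, V)                          # d x V: column j is the vector of vocab[j]
W_v = rand_matrix(d, d)                          # d x d: folds a node's own word vector into h_n
W_r = {r: rand_matrix(d, d) for r in relations}  # one separate d x d matrix per relation
b = [0.0] * d                                    # bias term


def word_vector(word):
    """x_w: read column word_index[word] out of W_e."""
    j = word_index[word]
    return [W_e[i][j] for i in range(d)]
```

Storing words as columns means looking up $x_w$ is just reading one column of $W_e$; the relation matrices live in a dictionary so that each label gets its own, untied weights.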

Model

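Given the parse tree in Figure 1, we first compute leaf representations. For example, the hidden representation of the leaf “helots” is

$$h_{\text{helots}} = f(W_v \cdot x_{\text{helots}} + b)$$

where $f$ is a non-linear activation function such as $\tanh$ and $b$ is the bias term.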

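Once all leaves are finished, we move to the interior nodes whose children have already been processed. Continuing from “helots” to its parent, “called”, we compute:

$$h_{\text{called}} = f(W_{\text{DOBJ}} \cdot h_{\text{helots}} + W_v \cdot x_{\text{called}} + b)$$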

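We repeat this process up to the root, whose hidden vector

$$h_{\text{depended}} = f(W_{\text{NSUBJ}} \cdot h_{\text{economy}} + W_{\text{PREP}} \cdot h_{\text{on}} + W_v \cdot x_{\text{depended}} + b)$$

is the representation of the entire sentence.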

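The composition equation for any node $n$ with children $K(n)$ and word vector $x_w$ is

$$h_n = f\Big(W_v \cdot x_w + b + \sum_{k \in K(n)} W_{R(n,k)} \cdot h_k\Big)$$

where $R(n, k)$ is the dependency relation between node $n$ and child node $k$.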
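The bottom-up computation above can be sketched as a post-order traversal that applies the composition equation at every node, with $f = \tanh$. This is a minimal pure-Python sketch, not the authors’ code: the weights are random, `d = 4` is arbitrary, and the tree is our own reconstruction of the Figure 1 parse (only the DOBJ, NSUBJ and PREP arcs are fixed by the equations above; the remaining arcs and labels are assumptions).

```python
import math
import random

d = 4
rng = random.Random(0)


def rand_matrix(rows, cols, scale=0.5):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(M, x):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def add(*vectors):
    """Element-wise sum of any number of equal-length vectors."""
    return [sum(vals) for vals in zip(*vectors)]


# Node = (word, [(relation, child), ...]); assumed reconstruction of the example parse.
tree = ("depended", [
    ("NSUBJ", ("economy", [
        ("POSS", ("city", [("DET", ("this", [])), ("POSSESSIVE", ("'s", []))])),
    ])),
    ("PREP", ("on", [
        ("POBJ", ("peasants", [
            ("AMOD", ("subjugated", [])),
            ("VMOD", ("called", [("DOBJ", ("helots", []))])),
        ])),
    ])),
])

words = ["this", "city", "'s", "economy", "depended", "on",
         "subjugated", "peasants", "called", "helots"]
relations = ["DET", "POSSESSIVE", "POSS", "NSUBJ", "PREP", "POBJ", "AMOD", "VMOD", "DOBJ"]
x = {w: [rng.uniform(-1, 1) for _ in range(d)] for w in words}  # word vectors x_w
W_v = rand_matrix(d, d)
W_r = {r: rand_matrix(d, d) for r in relations}
b = [0.0] * d


def forward(node, hidden):
    """Return h_n for `node`, recording every node's hidden vector in `hidden`."""
    word, children = node
    # Post-order: each child's h_k is computed before the parent uses it.
    child_terms = [matvec(W_r[rel], forward(child, hidden)) for rel, child in children]
    # h_n = f(W_v x_w + b + sum_k W_{R(n,k)} h_k) with f = tanh; leaves have no child terms.
    pre = add(matvec(W_v, x[word]), b, *child_terms)
    h = [math.tanh(v) for v in pre]
    hidden[word] = h  # keyed by word only because every word in this sentence is unique
    return h


hidden = {}
h_root = forward(tree, hidden)  # representation of the whole sentence
```

Because children are processed before their parent, $h_{\text{helots}}$ already exists when $h_{\text{called}}$ is computed, matching the worked steps. A real implementation would key hidden vectors by token position rather than by word.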

Training

Intuitively, we want to encourage the vectors of questions to be near their correct answers and far away from incorrect answers. This goal is accomplished with a contrastive max-margin objective function.

For this problem domain, the answers themselves are words that appear in other questions, so the answers can be trained in the same feature space as the question text. This lets the model capture relationships between answers instead of incorrectly assuming that all answers are independent. (I don’t think it’s necessary to model this kind of relation between answers here: as the paper states, they don’t need a ranked list of answers, and any answer that doesn’t match the predefined answer list is treated as wrong. We also can’t be sure what will be captured in the embedding vectors. However, this may be helpful in other domains where we model the outputs as independent categories even though they are not.)

- Given a sentence paired with its correct answer $c$, we randomly select $j$ incorrect answers from the set of all incorrect answers and denote this subset as $Z$.

- Since $c$ is part of the vocabulary, it has a word vector $x_c \in W_e$, and each incorrect answer $z \in Z$ is also associated with a vector $x_z \in W_e$.

- We define $S$ to be the set of all nodes in the sentence’s dependency tree, where an individual node $s \in S$ is associated with the hidden vector $h_s$.

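The error for the sentence is

$$C(S, \theta) = \sum_{s \in S} \sum_{z \in Z} L\big(rank(c, s, Z)\big) \max(0,\ 1 - x_c \cdot h_s + x_z \cdot h_s)$$

where $rank(c, s, Z)$ provides the rank of the correct answer $c$ with respect to the incorrect answers $Z$. We transform this rank into a loss function to optimize the top of the ranked list:

$$L(r) = \sum_{i=1}^{r} \frac{1}{i}$$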
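The per-sentence error can be sketched directly from the formula. Here the rank is computed exactly, which we take to mean the number of incorrect answers that violate the margin $x_c \cdot h_s < 1 + x_z \cdot h_s$ (our assumption, chosen to match the violation test used by the sampled approximation). Vectors are plain Python lists and the toy numbers are made up.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def rank_loss(r):
    """L(r) = sum_{i=1}^{r} 1/i, so L(0) = 0 and L grows slowly with r."""
    return sum(1.0 / i for i in range(1, r + 1))


def exact_rank(x_c, Z, h_s):
    """Assumed definition: how many incorrect answers violate x_c.h_s < 1 + x_z.h_s."""
    score_c = dot(x_c, h_s)
    return sum(1 for x_z in Z if score_c < 1 + dot(x_z, h_s))


def sentence_error(x_c, Z, S):
    """C(S, theta): sum over nodes s and incorrect answers z of
    L(rank(c, s, Z)) * max(0, 1 - x_c.h_s + x_z.h_s)."""
    total = 0.0
    for h_s in S:
        weight = rank_loss(exact_rank(x_c, Z, h_s))
        for x_z in Z:
            total += weight * max(0.0, 1 - dot(x_c, h_s) + dot(x_z, h_s))
    return total


# Toy 2-d example: the first node already beats both incorrect answers by the margin,
# the second node violates the margin for both of them.
x_c = [1.0, 0.0]
Z = [[0.0, 1.0], [-1.0, 0.0]]
S = [[1.0, 0.0], [0.0, 1.0]]
error = sentence_error(x_c, Z, S)  # 4.5
```

With this definition a rank of zero coincides with every hinge term being zero, so $L(0) = 0$ never hides a real margin violation; the second node has rank 2, so its hinge terms (2 and 1) are weighted by $L(2) = 1.5$.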

Since $rank(c, s, Z)$ is expensive to compute, we approximate it by randomly sampling incorrect answers until a violation ($x_c \cdot h_s < 1 + x_z \cdot h_s$) is observed; if $K$ samples were drawn, we set $rank(c, s, Z) = (|Z| - 1)/K$.
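A sketch of the sampled approximation, assuming we draw from $Z$ without replacement and round $(|Z| - 1)/K$ down to an integer (the WSABIE-style convention, assumed here so that $L(r)$ is defined). `approx_rank` is a hypothetical helper name.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def approx_rank(x_c, Z, h_s, rng):
    """Sampled estimate of rank(c, s, Z).

    Draw incorrect answers in random order (without replacement) until one
    violates x_c.h_s < 1 + x_z.h_s. If that took K draws, return
    floor((|Z| - 1) / K); return 0 if no incorrect answer violates the margin.
    """
    order = list(range(len(Z)))
    rng.shuffle(order)
    score_c = dot(x_c, h_s)
    for K, idx in enumerate(order, start=1):
        if score_c < 1 + dot(Z[idx], h_s):
            return (len(Z) - 1) // K
    return 0
```

When many incorrect answers violate the margin, one is usually found within a draw or two, giving a large rank estimate without scoring all of $Z$; when violations are rare, more draws are needed and the estimate shrinks accordingly.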

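The model minimizes the sum of the error over all sentences $T$, normalized by the number of nodes $N$ in the training set:

$$J(\theta) = \frac{1}{N} \sum_{t \in T} C(t, \theta)$$

where $\theta = (W_{r \in R}, W_v, W_e, b)$ and $R$ is the set of all dependency relations in the data.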
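A tiny arithmetic sketch of the normalization: the divisor is the total node count over the whole training set, not a per-sentence average. The error values and node counts are invented for illustration.

```python
def objective(sentence_errors, node_counts):
    """J(theta) = (1/N) * sum_t C(t, theta), where N is the total number of
    dependency-tree nodes over all training sentences."""
    N = sum(node_counts)
    return sum(sentence_errors) / N


# Two made-up sentences: errors 4.5 and 1.5, with 2 and 10 nodes.
J = objective([4.5, 1.5], [2, 10])  # 6.0 / 12 = 0.5
```

A per-sentence mean would instead give $(4.5/2 + 1.5/10)/2 = 1.2$, so the choice of normalizer changes how much long sentences count.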

From Sentences to Questions

The model we have just described considers each sentence in a quiz bowl question independently. However, previously heard sentences within the same question contain useful information that we do not want our model to ignore. While past work on RNN models has been restricted to the sentential and sub-sentential levels, we show that sentence-level representations can be easily combined to generate useful representations at the larger paragraph level.

The simplest and best aggregation method is just to average the representations of each sentence seen so far in a particular question. This method is very powerful and performs better than most of our baselines. We call this averaged DT-RNN model QANTA: a question answering neural network with trans-sentential averaging.
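Trans-sentential averaging is a running mean over sentence vectors. A minimal sketch, assuming each sentence is represented by a fixed-length vector such as its root hidden vector (our assumption); `running_averages` is a hypothetical name and the input vectors are made up.

```python
def running_averages(sentence_vectors):
    """Question representation after each sentence: the mean of all sentence
    vectors seen so far (trans-sentential averaging)."""
    averages = []
    total = None
    for n, vec in enumerate(sentence_vectors, start=1):
        total = list(vec) if total is None else [t + v for t, v in zip(total, vec)]
        averages.append([t / n for t in total])
    return averages


# Three made-up 2-d sentence vectors from one question.
reps = running_averages([[1.0, 2.0], [3.0, 4.0], [5.0, 0.0]])
# reps == [[1.0, 2.0], [2.0, 3.0], [3.0, 2.0]]
```

The entry after sentence $k$ is the representation available once $k$ sentences have been heard, which is what allows an answer from a partial question.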

Decoding

To obtain the final answer prediction given a partial question, we first generate a feature representation for each sentence within that partial question. This representation is computed by concatenating together the word embeddings and hidden representations averaged over all nodes in the tree as well as the root node’s hidden vector. Finally, we send the average of all of the individual sentence features as input to a logistic regression classifier for answer prediction. The paper didn’t mention how this classifier is trained.
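The decoding features can be sketched as below. The feature layout follows the description above (mean word embedding, mean hidden vector, root hidden vector, then an average over sentences). The classifier is shown as a multinomial (softmax) logistic regression with hypothetical, already-given weights; that choice is our assumption, since the training procedure is not described.

```python
import math


def mean(vectors):
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def sentence_features(word_vecs, hidden_vecs, root_hidden):
    """[mean word embedding over nodes] + [mean hidden vector over nodes] + [root hidden vector]."""
    return mean(word_vecs) + mean(hidden_vecs) + list(root_hidden)


def question_features(sentences):
    """Average the per-sentence features over every sentence heard so far."""
    return mean([sentence_features(*s) for s in sentences])


def predict(features, weights, biases):
    """Softmax logistic regression over answers; weights and biases are hypothetical."""
    scores = [sum(w * f for w, f in zip(row, features)) + bias
              for row, bias in zip(weights, biases)]
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


# One made-up sentence with d = 2: two nodes' word vectors, hidden vectors, and the root.
sentence = ([[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [0.5, -0.5]], [0.2, 0.4])
feats = question_features([sentence, sentence])  # length 3 * d = 6
best, probs = predict(feats, [[1, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 1]], [0.0, 0.0])
```

Each sentence contributes a $3d$-dimensional vector, so the classifier input size stays fixed no matter how many sentences of the question have been read.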